
In today’s fast-paced world, continuous integration and continuous deployment (CI/CD) workflows seem to be the only reasonable way to stay on top of software testing and stability. Numerous articles cover the basics of CI/CD, so in this article I will focus on how to implement three popular deployment strategies on the latest release of OpenShift. To follow along, download the latest release of OpenShift from GitHub (at the time of writing, I was using version 1.5.0-rc.0) and run:

oc cluster up

This will take a while the first time, because it downloads several images needed to run the OpenShift cluster locally on your machine. Once this operation finishes, you should see:

$ oc cluster up
-- Checking OpenShift client ... OK
-- Checking Docker client ... OK
-- Checking Docker version ... OK
-- Checking for existing OpenShift container ... OK
-- Checking for openshift/origin:v1.5.0-rc.0 image ...
...
-- Server Information ...
   OpenShift server started.
   The server is accessible via web console at:
       https://192.168.121.49:8443
   You are logged in as:
       User: developer
       Password: developer
   To login as administrator:
       oc login -u system:admin

You can access your cluster from the command line (oc) or from your browser (https://localhost:8443/) with the above credentials.

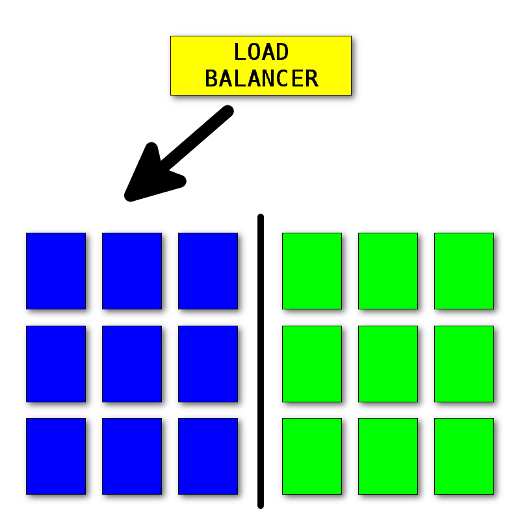

Blue-green deployment

In short, blue-green deployment means running two identical environments behind a router or load balancer that directs traffic to the appropriate environment:

Blue-green deployment

To illustrate this type of deployment, let’s create nine replicas of a blue application:

# create a deployment config running 9 replicas of the specified image
oc run blue --image=openshift/hello-openshift --replicas=9
# set the RESPONSE environment variable in the deployment config
oc set env dc/blue RESPONSE="Hello from Blue"
# expose the deployment inside the cluster as a service
oc expose dc/blue --port=8080

We will use a hello-world application image provided by the OpenShift team. By default, this image runs a simple web server that returns a “Hello world”-style greeting, unless the RESPONSE environment variable is set, in which case it returns that value instead. That is why we set RESPONSE: it makes the blue version of the application easy to identify.
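Because the green environment (and later the canary) is created with the same three commands, you may find it convenient to wrap them in a small shell function. This is a minimal sketch: the function name `deploy_version` and its argument order are hypothetical and not part of `oc`; the commands inside are exactly the ones used above.

```shell
# Hypothetical helper (not part of oc): creates one version of the
# hello-openshift application as a deployment config plus a service.
# Usage: deploy_version NAME RESPONSE [REPLICAS]
deploy_version() {
  local name=$1 response=$2 replicas=${3:-9}

  # deployment config running REPLICAS copies of the sample image
  oc run "$name" --image=openshift/hello-openshift --replicas="$replicas" || return 1
  # the text returned by the web server identifies this version
  oc set env "dc/$name" RESPONSE="$response" || return 1
  # service named after the deployment config, reachable inside the cluster
  oc expose "dc/$name" --port=8080
}
```

With it, `deploy_version blue "Hello from Blue"` creates the blue environment, `deploy_version green "Hello from Green"` the green one, and `deploy_version canary "Hello from Canary" 1` a single-instance canary.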

Once the application is up and running, we must expose it externally. For that we will use a route, which will also act as the switch between the two versions of our application during the deployment process.

# expose the application outside the cluster under the bluegreen route
oc expose svc/blue --name=bluegreen

Now it is time to perform the upgrade. We create an environment identical to the one currently running. To distinguish the two versions of our application, this time we set RESPONSE to “Hello from Green”:

oc run green --image=openshift/hello-openshift --replicas=9
oc set env dc/green RESPONSE="Hello from Green"
oc expose dc/green --port=8080
# attach the green service to the bluegreen route created earlier,
# while still sending all of the traffic to blue
oc set route-backends bluegreen blue=100 green=0

Both applications are now running, but blue receives all of the traffic. Meanwhile, the green version goes through all the necessary tests (integration, end-to-end, etc.). When we are satisfied that the new version works properly, we can flip the switch and route all of the traffic to the green environment:

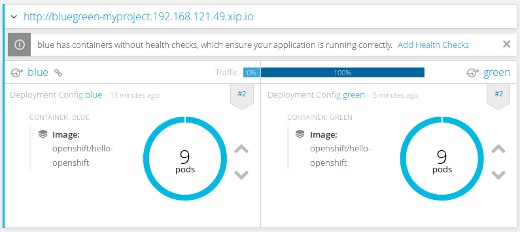

oc set route-backends bluegreen blue=0 green=100

All of the above steps can also be performed from the web console. The screenshot below shows that traffic is now served by the green environment:

OpenShift web console, route preview after the switch to the green environment
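Flipping the switch, and flipping it back if the green version misbehaves, is the same `oc set route-backends` command with the weights swapped. A minimal sketch of a hypothetical `switch_to` function, assuming the `bluegreen` route and the `blue` and `green` services created above; running `oc set route-backends` with only a route name prints that route’s current backends and weights.

```shell
# Hypothetical helper: send 100% of a route's traffic to one service and
# 0% to the other, then print the resulting backends.
# Usage: switch_to ROUTE ACTIVE IDLE
switch_to() {
  local route=$1 active=$2 idle=$3
  oc set route-backends "$route" "$active=100" "$idle=0" || return 1
  # show the weights now configured on the route
  oc set route-backends "$route"
}
```

`switch_to bluegreen green blue` performs the cut-over, and `switch_to bluegreen blue green` rolls it back, which works only as long as the blue environment is still running.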

Let me summarize the blue-green deployment strategy. Zero downtime is by far its biggest advantage: the switch is almost instantaneous, so users do not notice when their requests start being served by the new environment. Unfortunately, the same abrupt switch can also cause problems: all in-flight transactions and sessions are lost, because traffic physically moves from one set of machines to another. That is definitely something to take into account when applying this approach.

The other important benefit of this approach is that tests are performed in production. Because of the nature of this approach, we have a full environment for tests (again, an ideal world for developers), which makes us confident that the application works as expected. In the worst case, you can easily roll back to the old version of the application. Another disadvantage of this strategy is the need for N-1 data compatibility (the old and new versions must both be able to work with the same data, because they run side by side), which applies to all of the strategies discussed in the rest of this article.

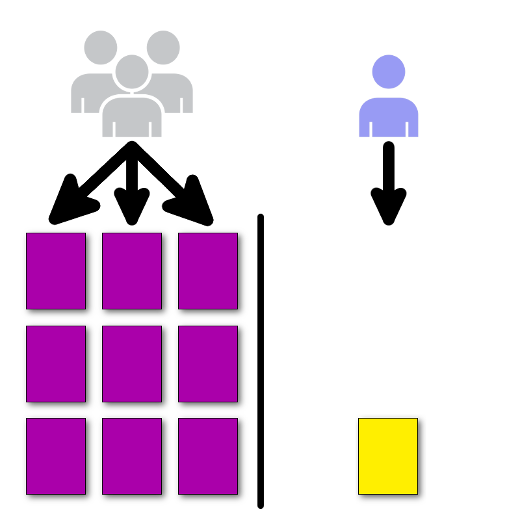

Canary deployment

Canary is about deploying an application in small, incremental steps, and only to a small group of people. There are a few possible approaches, ranging from the simplest, serving only some percentage of the traffic to the new application (I will show how to do that in OpenShift), to more complicated solutions, such as a feature toggle. A feature toggle allows you to gate access to certain features based on specific criteria (e.g., gender, age, country of origin). The most advanced feature toggle I am aware of, Gatekeeper, is implemented at Facebook.

Canary deployment

Let’s try implementing the canary deployment using OpenShift. First we need to create our application. Again we will use the hello-openshift image for that purpose:

oc run prod --image=openshift/hello-openshift --replicas=9
oc set env dc/prod RESPONSE="Hello from Prod"
oc expose dc/prod --port=8080

Next, we expose the application so that it is accessible externally:

oc expose svc/prod

The newer version of the application (called canary) is deployed similarly, but with only a single instance:

oc run canary --image=openshift/hello-openshift
oc set env dc/canary RESPONSE="Hello from Canary"
oc expose dc/canary --port=8080
# attach the canary service to the prod route, with no traffic yet
oc set route-backends prod prod=100 canary=0

We want to verify whether the new version of the application works correctly in our “production” environment. The caveat is that we want to expose it only to a small number of clients, to gather feedback, for example. For that we need to configure the route so that only a small percentage of the incoming traffic is forwarded to the newer (canary) version of the application:

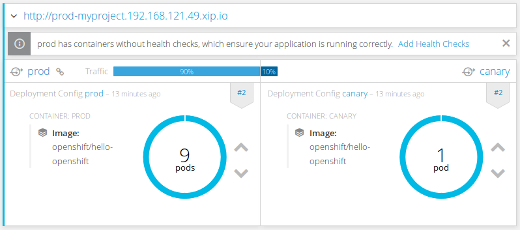

oc set route-backends prod prod=90 canary=10

The easiest way to verify this new setup (as seen in the OpenShift web console screenshot below) is to run the following loop:

while true; do curl http://prod-myproject.192.168.121.49.xip.io/; sleep .2; done

OpenShift web console, route preview after sending small percentage of the traffic to the canary version

Note: The number of replicas you deploy affects the percentage of traffic each version actually receives. The route splits traffic according to the backend weights, and the service in front of each deployment then load-balances across its pods; together, these determine the actual amount of traffic the canary gets. In our case, it is approximately 1.5%.
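Because the actual split depends on replica counts as well as route weights, it can be worth measuring it instead of eyeballing the endless loop above. A minimal sketch of a hypothetical `sample_responses` function that sends a fixed number of requests and counts the responses per version; the URL you pass is the one from the loop above, which depends on your cluster’s IP address.

```shell
# Hypothetical helper: send COUNT requests to URL and print how many
# responses came back with each distinct body, most frequent first.
# Usage: sample_responses URL [COUNT]
sample_responses() {
  local url=$1 count=${2:-200} i=0
  while [ "$i" -lt "$count" ]; do
    # print the body plus a newline; empty lines (if the body already
    # ended with a newline) are dropped by sed below
    curl -s "$url"
    echo
    i=$((i + 1))
  done | sed '/^$/d' | sort | uniq -c | sort -rn
}
```

For example, `sample_responses http://prod-myproject.192.168.121.49.xip.io/ 1000` prints one line per version; dividing the canary count by 1000 gives the observed share of traffic.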

The biggest advantage of this approach is the feature toggle, especially when you have one that allows you to pick the target groups of your canary deployment. Combined with decent user-behavior analytics tools, it gives you good feedback about the new features you are considering deploying to a wider audience. Like blue-green deployment, canary suffers from the N-1 data compatibility requirement, because at any point in time more than one version of the application is running.
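Since canary is about small, incremental steps, the weight changes themselves can be scripted. A minimal sketch of a hypothetical `canary_ramp` function; the step sizes and the fixed pause between steps are arbitrary assumptions, and in practice each step should be gated on your own health checks or metrics rather than a timer.

```shell
# Hypothetical helper: shift traffic on ROUTE from STABLE to CANARY in steps.
# Usage: canary_ramp ROUTE STABLE CANARY PAUSE_SECONDS WEIGHT...
# Each WEIGHT is the percentage of the route's weight sent to CANARY.
canary_ramp() {
  local route=$1 stable=$2 canary=$3 pause=$4 weight
  shift 4
  for weight in "$@"; do
    if [ "$weight" -lt 0 ] || [ "$weight" -gt 100 ]; then
      echo "invalid canary weight: $weight" >&2
      return 1
    fi
    oc set route-backends "$route" "$stable=$((100 - weight))" "$canary=$weight" || return 1
    # leave time for you (or your monitoring) to react before the next step
    sleep "$pause"
  done
}
```

`canary_ramp prod prod canary 300 10 25 50 100` would move the canary from 10% to 100% of the route’s weight, one step every five minutes. As the note on replicas explains, you would likely also scale the canary up as its weight grows, for example with `oc scale dc/canary --replicas=9`.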

There is nothing stopping you from having more than one canary deployment at a time.

Rolling deployment

Rolling deployment is the default deployment strategy in OpenShift. In short, this process is about slowly replacing currently running instances of our application with newer ones. The process is best illustrated with the following animation:

Rolling deployment

On the left, we have the currently running version of our application. On the right side, we have a newer version of that same application. At any point in time, exactly N+1 instances are running. It is important to note that an old instance is removed only after the new one has passed its health checks. All of these parameters can easily be tweaked in the deployment strategy parameters in OpenShift.


Figure 6. Rolling deployment parameters in OpenShift web console.
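Besides the web console, these parameters can be changed from the command line by patching `spec.strategy.rollingParams` of the deployment config. A sketch, assuming the `rolling` deployment config created in the next step; the helper name `set_rolling_params` is hypothetical, while `maxSurge` and `maxUnavailable` are rolling strategy parameters of OpenShift deployment configs. Setting `maxSurge` to 1 and `maxUnavailable` to 0 approximates the N+1 behavior described above: at most one extra pod, and never fewer than N available.

```shell
# Hypothetical helper: tune the rolling strategy of a deployment config.
# Usage: set_rolling_params DC MAX_SURGE MAX_UNAVAILABLE
# Both values are treated as integer pod counts (percentages are not handled).
set_rolling_params() {
  local dc=$1 surge=$2 unavailable=$3 patch
  patch=$(printf '{"spec":{"strategy":{"rollingParams":{"maxSurge":%d,"maxUnavailable":%d}}}}' \
    "$surge" "$unavailable") || return 1
  oc patch "dc/$dc" -p "$patch"
}
```

`set_rolling_params rolling 1 0` applies the N+1 configuration to the sample application.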

Let us then create our sample application:

oc run rolling --image=openshift/hello-openshift --replicas=9
oc expose dc/rolling --port=8080
oc expose svc/rolling

Once the application is up and running, we can trigger a new deployment. To do so, we change the deployment’s configuration by setting an environment variable. This triggers a new deployment because, by default, every deployment config has a ConfigChange trigger defined.

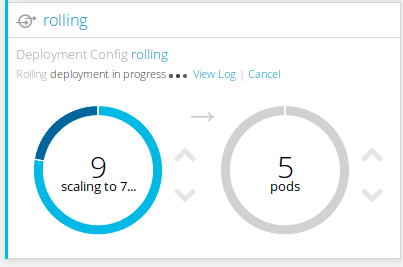

oc set env dc/rolling RESPONSE="Hello from new roll"

The screenshot below was taken in the middle of the rollout, but the best way to see the process in action is to watch it in OpenShift’s web console:

Rolling deployment in OpenShift web console
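If the new version misbehaves halfway through, you can go back to the previous deployment. A minimal sketch of a hypothetical `rollback_dc` function, assuming your `oc` client includes the `oc rollout` subcommands (check `oc rollout --help` on your version):

```shell
# Hypothetical helper: show the rollout history of a deployment config and
# roll back to the previous revision.
# Usage: rollback_dc NAME
rollback_dc() {
  local dc=$1
  # list the revisions OpenShift has recorded for this deployment config
  oc rollout history "dc/$dc" || return 1
  # return to the previous revision
  oc rollout undo "dc/$dc"
}
```

`rollback_dc rolling` would undo the “Hello from new roll” change. Note that OpenShift may disable a deployment config’s image change triggers as part of a rollback; `oc set triggers dc/rolling --auto` re-enables them.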

The major benefits of this approach include incremental rollout and gradual verification of the application with increasing traffic. On the other hand, we again struggle with the N-1 compatibility problem, which is a major concern for all continuous deployment approaches. Lost transactions and logged-out users are also something to take into consideration with this approach. One final drawback is the requirement for N+1 instances, although that is easier to fulfill than blue-green’s demand for a complete, identical environment.

Conclusion

I’ll close with the best advice I was given: There is no one-size-fits-all approach. It is important to fully understand the approach you pick, as well as the alternatives.

It is also important that developers and operations teams work closely together when picking the right approach for your application.

Finally, although my article looked at each of these strategies in isolation, there is nothing wrong with combining them into the solution that best fits your application, your organization, and the processes you have in place.

I will be presenting this topic as part of my three-hour workshop, Effectively running Python applications in Kubernetes/OpenShift, at PyCon 2017 (May 17-25) in Portland, Oregon.

If you have questions or feedback, let me know in the comments below, or reach out over Twitter: @soltysh.
