An important but often underestimated part of software development is testing. Testing is, by definition, challenging. If bugs were easy to find, they wouldn’t be there in the first place. A tester has to think outside the box to find the bugs that others have missed. In many cases, understanding the business domain of an application is crucial for effective testing, as is detailed knowledge of the application itself.

In open source projects, quality is typically addressed by contributors and coordinating architects. Tests of units, components, and services are often done effectively and automated well. This allows a project to move forward even when many contributions are made. Comprehensive automated testing with sufficient range and depth helps keep the product stable.

While some open source projects develop from the accumulated contributions of dispersed people, DevOps-oriented projects may follow a Scrum or Kanban approach that includes simultaneous development and release. This process also relies heavily on comprehensive tests and their seamless automation. Whenever there is a new version (this can be as small as a check-in of a single source file), tests should be able to verify that the system didn’t break. At the same time, those tests shouldn’t break themselves either, which for UI-based tests is not trivial.

The testing pyramid, proposed by Mike Cohn in his book, Succeeding with Agile, positions the UI as the smallest part of testing. Most of the testing should focus on the unit and service or component levels. Working at those levels makes it easier to design tests well, and automation there tends to be easier and more stable.

I agree that this is a good strategy. However, from what I’ve observed on various projects, UI testing remains an important part. In the web world, for example, the availability of techniques and frameworks like Ajax and AngularJS allows designers to create interesting and highly interactive user experiences in which many parts of the application come together under test. An ultimate example of UI-rich web applications is the single-page application, where all or much of the application functionality is presented to users in a single page. The complexity of such a UI can rival that of the more traditional client-server applications.

I therefore like to leave some more room in the top of the picture, making it look like this:

Even for UI automation, the technical side can be fairly straightforward. There are simple open source tools like Selenium, and more comprehensive commercial tools like our own TestArchitect that can take care of interfacing with the UI, mimicking the user’s behavior toward the application under test. Tests through the UI are often mixed with non-UI operations as well, such as service calls, command line commands, and SQL queries.

The problems with UI tests come in maintenance. A small change in a UI design or UI behavior can knock out a large number of the automated tests that interact with it. Common causes are interface elements that can no longer be found and unexpected waiting times for the UI to respond to operations. UI automation is then avoided for the wrong reason: the inability to make it work well.

Let me describe a couple of steps you can take to alleviate these problems. A good basis for successful automation is test design. How you design your tests has a big impact on their automation. In other words, successful test automation is not as much a technical challenge as it is a test design challenge. As I see it, there are two major levels that come together in a good test design:

- Overall structure of the tests

- Design of individual test cases

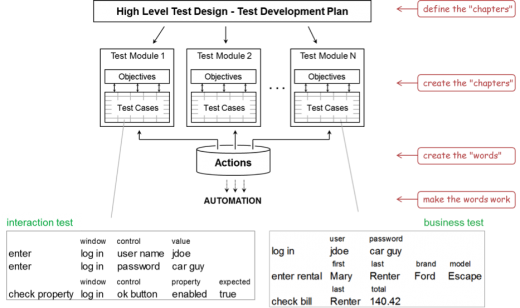

For the structure of tests we follow a modularized approach, similar to how applications are designed. Test cases are organized in test modules. Think of them like the chapters in a book. We have some detailed templates for how to do that, but at the very minimum you should try to distinguish between business tests and interaction tests. The business tests look at the business objects and business flows, hiding any UI (or API) navigation details. Interaction tests look at whether users or other systems can interact with the application under test, and consequently care about UI details. The key goal is to avoid mixing interaction tests and business tests, since the detailed level of interaction tests will make business tests hard to understand and maintain.
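To make the distinction concrete, here is a minimal Python sketch. Everything in it is hypothetical and invented for illustration: `FakeOrderApp` stands in for an application under test, and `place_order` is an assumed business-level helper. It is not how any particular tool structures its tests, only one way the separation could look.

```python
class FakeOrderApp:
    """Hypothetical stand-in for an application under test."""

    def __init__(self):
        self.fields = {}   # current contents of the order form
        self.orders = []   # orders that have been submitted

    # Low-level, UI-style operations: the level interaction tests care about.
    def enter(self, field, value):
        self.fields[field] = value

    def click(self, button):
        if button == "submit":
            self.orders.append(dict(self.fields))
            self.fields.clear()


def place_order(app, customer, item, quantity):
    """Business-level action (assumed name) that hides the navigation details."""
    app.enter("customer", customer)
    app.enter("item", item)
    app.enter("quantity", quantity)
    app.click("submit")


def business_test_order_is_recorded():
    """Business test: speaks only in terms of orders, not fields or buttons."""
    app = FakeOrderApp()
    place_order(app, "Alice", "widget", 3)
    return app.orders == [{"customer": "Alice", "item": "widget", "quantity": 3}]


def interaction_test_submit_clears_form():
    """Interaction test: checks a UI detail, namely that submitting empties the form."""
    app = FakeOrderApp()
    app.enter("customer", "Bob")
    app.click("submit")
    return app.fields == {}
```

If the form layout changes, only `place_order` and the interaction test need attention in this sketch; the business test stays as it is.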

Once the test modules have been determined, they can be developed whenever it is convenient. Typically business tests can be developed early, because they depend more on business rules and transactions than on how an application implements them. Interaction tests can be created when a team is defining the UIs and APIs.

Another effective step is to use a domain language approach like Behavior Driven Development (BDD) or actions (keywords). In BDD, scenarios are written in a format that comes close to natural language. “Actions” are predefined operations and checks that describe the steps to be taken in a test. In our Action Based Testing (ABT) approach, they’re written in a spreadsheet format to make them easier to read and maintain than tests scripted in a programming language. Since I noticed that actions are more concrete and easier to manage than sentences, I created a tool to flexibly convert back and forth between the two formats, combining the best of both worlds. You can read more on BDD and actions in an article I wrote for TechWell Insights.
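As a rough illustration of the actions idea, the Python sketch below runs a test written as rows of an action name followed by its arguments, much like rows in a spreadsheet. The action names (`enter`, `check`), the row layout, and the `run_actions` function are assumptions made up for this example; they are not the ABT or TestArchitect format.

```python
def enter_value(context, field, value):
    """Action: store a value in a named field of the (fake) application state."""
    context[field] = value
    return True


def check_value(context, field, expected):
    """Action: report whether a named field holds the expected value."""
    return context.get(field) == expected


# Registry that maps the action names used in test rows to their implementations.
ACTIONS = {"enter": enter_value, "check": check_value}


def run_actions(rows, actions, context):
    """Execute each row (action name, *arguments) and collect pass/fail results."""
    results = []
    for name, *args in rows:
        results.append(actions[name](context, *args))
    return results


# A test expressed as data: each row reads like a spreadsheet line.
TEST_ROWS = [
    ("enter", "first name", "John"),
    ("enter", "last name", "Smith"),
    ("check", "first name", "John"),
    ("check", "last name", "Jones"),  # deliberately failing check
]

results = run_actions(TEST_ROWS, ACTIONS, {})
```

The test itself is plain data, so it can be read and maintained without touching the automation code; only the functions behind the action names have to change when the application does.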

This picture gives an overview of how testing is organized in ABT, with the test modules and the actions in them. Note the difference between interaction tests and business tests. The automation focuses exclusively on automating the actions.

Another major factor in automation success is testability. Your application should facilitate testing as a key feature. Agile teams are particularly well suited to achieving this, since product owners, developers, QA people, and automation engineers cooperate. However, open source projects do not necessarily have such teams, so whoever owns the product will have to define testability.

For more on testability, check out another article I wrote for TechWell Insights. The main aspects of testability, as described there, are:

- Overall design of the application (with clear components, tiers, services, etc.)

- Specific features (like API hooks or “ready” properties to help in timing, clear and unique identifying properties for UI elements, and white box access to data and events before they’re even displayed)
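To show how a “ready” property can help with timing, here is a small Python sketch that polls a condition until it holds or a timeout expires, instead of sleeping for a fixed time. The `wait_until` helper and the `FakePage` class with its `ready` property are hypothetical names invented for this example; a real application would expose its own hook.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.05):
    """Poll `condition` until it returns True or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


class FakePage:
    """Hypothetical page that becomes ready a fixed delay after creation."""

    def __init__(self, ready_after):
        self._ready_at = time.monotonic() + ready_after

    @property
    def ready(self):
        # Stand-in for a "ready" property the application exposes for testing.
        return time.monotonic() >= self._ready_at


page = FakePage(ready_after=0.1)
page_became_ready = wait_until(lambda: page.ready, timeout=2.0)
```

The test continues as soon as the application reports that it is ready, so it neither fails on a slow response nor wastes time on a fast one.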

Automation can be challenging, especially via the UI. However, it cannot be avoided, nor should it be, just because it is difficult. Cooperation between all participants in a project can lead to good results that are stable, maintainable, and efficient to achieve.
