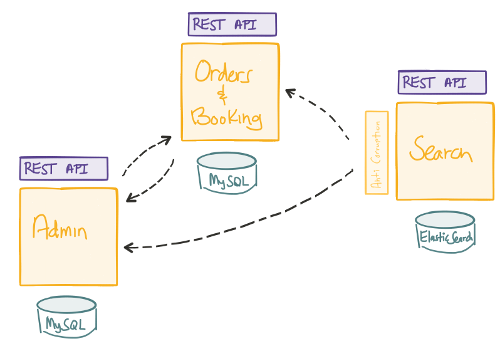

In this article, I’m going to explore perhaps the hardest problem when creating and developing microservices: your data. Using Spring Boot/Dropwizard/Docker doesn’t mean you’re doing microservices. Taking a hard look at your domain and your data will help you get to microservices. (For more background, read my blog series about microservices implementations: Why Microservices Should Be Event Driven, 3 things to make your microservices more resilient, and Carving the Java EE Monolith: Prefer Verticals, not Layers.)

Of the reasons we attempt a microservices architecture, chief among them is allowing your teams to be able to work on different parts of the system at different speeds with minimal impact across teams. We want teams to be autonomous, capable of making decisions about how to best implement and operate their services, and free to make changes as quickly as the business may require. If we have our teams organized to do this, then the reflection in our systems architecture will begin to evolve into something that looks like microservices.

To gain this autonomy, we need to shed our dependencies, but that’s a lot easier said than done. I’ve seen folks refer to this idea in part, trivially, as “each microservice should own and control its own database, and no two services should share a database.” The idea is sound: Don’t share a single database across services, because then you run into conflicts, such as competing read/write patterns, data-model conflicts, and coordination challenges. But a single database does afford us a lot of safeties and conveniences: ACID transactions, a single place to look, a single place to manage, a model that is well understood (kind of?), and so on.

When building microservices, how do we reconcile these safeties with splitting up our database into multiple smaller databases?

First, for an enterprise building microservices, we need to make the following clear:

- What is the domain? What is reality?

- Where are the transactional boundaries?

- How should microservices communicate across boundaries?

- What if we just turn the database inside out?

What is the domain?

This seems to be ignored at a lot of places, but it is a huge difference between how internet companies practice microservices and how a traditional enterprise may implement them (or may fail to, because it neglects this question).

Before we can build a microservice and reason about the data it uses (produces/consumes, etc.), we must have a reasonably good understanding of what that data represents. For example, before we can store information in a database about “bookings” for our Ticket Monster and its migration to microservices, we need to understand what a booking is. Likewise, in your domain, you may need to understand what an account is, or an employee, or a claim, etc.

To do that, we need to dig into what it is in reality. For example: What is a book? Think about that, as it’s a fairly simple example. How would we express this in a data model?

Is a book something with pages? Is a newspaper a book? (It has pages.) Maybe a book has a hard cover? Or is not something that’s released/published every day? If I write a book (which I did—Microservices for Java Developers), the publisher may have an entry for me with a single row representing my book. But a bookstore may have five of my books. Is each one a book? Or are they copies? How would we represent this? What if a book is so long it has to be broken down into volumes? Is each volume a book? Or all of them combined? What if many small compositions are combined together? Is the combination the book? Or each individual one? Basically, I can publish a book, have many copies of it in a bookstore, each one with multiple volumes.

So then, what is a book?

The reality is there is no reality. There is no objective definition of what is a book with respect to reality, so to answer any question like this, we have to know: Who’s asking the question, and what is the context?

We humans can quickly—and even unconsciously—resolve the ambiguity of this understanding because we have a context in our heads, in the environment, and in the question. But a computer doesn’t. We need to make this context explicit when we build our software and model our data. Using a book to illustrate this is simplistic. Your domain (an enterprise) with its accounts, customers, bookings, claims, and so on is going to be far more complicated and far more conflicting/ambiguous. We need boundaries.

Where do we draw the boundaries? The work in the Domain Driven Design community helps us deal with this complexity in the domain. We draw a bounded context around entities, value objects, and aggregates that model our domain. Stated another way: We build and refine a model that represents our domain, and that model is contained within a boundary that defines our context. And this is explicit. These boundaries end up being our microservices, or the components within the boundaries end up being microservices, or both. In any case, microservices is about boundaries and so is DDD.

Our data model—how we wish to represent concepts in a physical data store (note the explicit difference here)—is driven by our domain model, not the other way around. When we have this boundary, we know (and can make assertions) about what is correct in our model and what is incorrect. These boundaries also imply a certain level of autonomy. Bounded context A may have a different understanding of what a book is than bounded context B. For example, maybe bounded context A is a search service that searches for titles where a single title is a book; maybe bounded context B is a checkout service that processes a transaction based on how many books (titles+copies) you’re buying, and so on.
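As a rough illustration of two bounded contexts holding different models of “a book,” here is a minimal Python sketch. The class names (`SearchBook`, `CheckoutLine`), the fields, and the `to_checkout_line` translation function are all hypothetical and chosen only for this example; they are not taken from any real system.

```python
from dataclasses import dataclass


# Search bounded context: a "book" is a single title.
@dataclass(frozen=True)
class SearchBook:
    isbn: str
    title: str
    author: str


# Checkout bounded context: a "book" is a number of copies being bought.
@dataclass(frozen=True)
class CheckoutLine:
    isbn: str
    copies: int
    unit_price_cents: int

    def total_cents(self) -> int:
        return self.copies * self.unit_price_cents


def to_checkout_line(book: SearchBook, copies: int, unit_price_cents: int) -> CheckoutLine:
    """Translate at the boundary: only the shared identifier crosses over."""
    return CheckoutLine(isbn=book.isbn, copies=copies, unit_price_cents=unit_price_cents)


# Placeholder values, for illustration only.
found = SearchBook(isbn="isbn-123", title="Some Title", author="Some Author")
line = to_checkout_line(found, copies=5, unit_price_cents=1000)
```

Each context keeps only what it needs: the checkout model has no title or author, and the search model has no notion of copies or price.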

You may stop and say, “Wait a minute. Netflix doesn’t say anything about Domain Driven Design, and neither does Twitter nor LinkedIn. Why should I listen to this about DDD?”

Here’s why:

“People try to copy Netflix, but they can only copy what they see. They copy the results, not the process.” Adrian Cockcroft, former Netflix Chief Cloud Architect

The journey to microservices is just that: a journey. It will be different for each company. There are no hard and fast rules, only trade-offs. Copying what works for one company because it appears to work in this one instance is an attempt to skip the process/journey and will not work. Your enterprise is not Netflix. In fact, I’d argue that for however complex the domain is at Netflix, it’s not as complicated as it is at your legacy enterprise. Searching for and showing movies, posting tweets, updating a LinkedIn profile, and so on are all a lot simpler than your insurance claims processing systems. These internet companies went to microservices because of speed to market and sheer volume/scale. (Posting a tweet to Twitter is simple. Posting tweets and displaying tweet streams for 500 million users is incredibly complex.)

Enterprises must confront complexity in both the domain and scale. So accept that this is a journey that balances domain, scale, and organizational changes. The journey will be different for each organization.

What are the transactional boundaries?

Back to the story. We need something like domain driven design to help us understand the models we’ll use to implement our systems and draw boundaries around these models within a context. We accept that a customer, account, booking, and so on may mean different things to different bounded contexts. We may end up with these related concepts distributed around our architecture, but we need a way to reconcile changes across these different models when changes happen. We need to account for this, but first, we must identify our transactional boundaries.

Unfortunately, developers still seem to approach building distributed systems all wrong: We look through the lens of a single, relational, ACID database. We also ignore the perils of asynchronous, unreliable networks. To wit, we do things like write fancy frameworks that keep us from having to know anything about the network (including RPC frameworks and database abstractions that also ignore the network) and try to implement everything with point-to-point synchronous invocations (REST, SOAP, other CORBA-like object serialization RPC libraries, etc.). We build systems without regard to authority vs. autonomy and end up trying to solve the distributed data problem with things such as two-phase commit across lots of independent services. Or we ignore these concerns altogether. This mindset leads to building brittle systems that don’t scale, and it doesn’t matter if you call it SOA, microservices, miniservices, or something else.

What do I mean by transactional boundaries? I mean the smallest unit of atomicity that you need with respect to the business invariants. Whether you use a database’s ACID properties to implement the atomicity or a two-phase commit doesn’t really matter. The point is to make these transactional boundaries as small as possible (ideally a single transaction on a single object) so we can scale. (Vaughn Vernon has a series of essays describing this approach with DDD Aggregates.) When we build our domain model, using DDD terminology, we identify entities, value objects, and aggregates. Aggregates in this context are objects that encapsulate other entities/value objects and are responsible for enforcing invariants; there can be multiple aggregates within a bounded context.

For example, let’s say we have the following use cases:

- allow customers to search for flights,

- allow a customer to pick a seat on a particular flight,

- and allow a customer to book a flight.

We’d probably have three bounded contexts here: search, booking, and ticketing. (We’d also have others, such as payments, loyalty, standby, upgrades, and so on, but let’s focus on three). Search is responsible for showing flights for specific routes and itineraries for a given time frame (range of days, times, etc.). Booking will be responsible for teeing up the booking process with customer information (name, address, frequent flyer number, etc.), seat preferences, and payment information. Ticketing would be responsible for settling the reservations with the airline and issuing a ticket. Within each bounded context, we want to identify transactional boundaries in which we can enforce constraints/invariants. We will not consider atomic transactions across bounded contexts, which I will discuss in the next section.

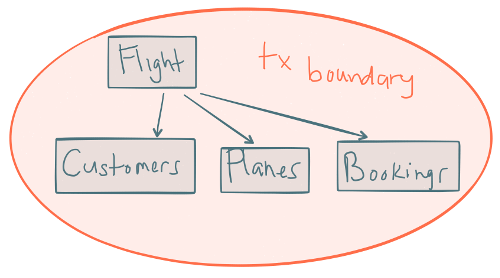

How would we model this, considering we want small transaction boundaries (a simplified version of booking a flight)? Maybe a flight aggregate that encapsulates values such as time, date, route, and entities such as customers, planes, and bookings? This seems to make sense—a flight has a plane, seats, customers, and bookings. The flight aggregate is responsible for keeping track of planes, seats, and so on for the purposes of creating bookings. This may make sense from a data-model standpoint inside of a database (nice relational model with constraints and foreign keys, etc.), or make a nice object model (inheritance/composition) in our source code, but let’s look at what happens.

Are there really invariants across all bookings, planes, flights, and so forth just to create a booking? That is, if we add a new plane to the flight aggregate, should we really include customers and bookings in that transaction? Probably not. What we have here is an aggregate built with compositional and data model conveniences in mind; however, the transactional boundaries are too big. If we have lots of changes to flights, seats, bookings, etc., we’ll have a lot of transactional conflicts (whether we use optimistic or pessimistic locking won’t matter). That obviously doesn’t scale, and failing orders all the time just because a flight schedule is changing is a terrible customer experience.
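To make the conflict concrete, here is a small sketch, assuming optimistic locking with a version number per aggregate. `VersionedStore`, `ConcurrentModification`, and the identifiers `flight-100` and `booking-1` are hypothetical names invented for this example; a real store would be a database, not a dictionary.

```python
class ConcurrentModification(Exception):
    """Raised when an aggregate changed since the caller loaded it."""


class VersionedStore:
    """Hypothetical in-memory store with optimistic locking per aggregate."""

    def __init__(self):
        self._rows = {}  # aggregate_id -> (version, state)

    def load(self, aggregate_id):
        empty = (0, {"schedule": None, "bookings": []})
        version, state = self._rows.get(aggregate_id, empty)
        # Hand back a copy so callers cannot mutate stored state directly.
        return version, {"schedule": state["schedule"], "bookings": list(state["bookings"])}

    def save(self, aggregate_id, expected_version, state):
        current_version, _ = self._rows.get(aggregate_id, (0, None))
        if current_version != expected_version:
            raise ConcurrentModification(aggregate_id)
        self._rows[aggregate_id] = (current_version + 1, state)


store = VersionedStore()

# An admin and a customer both load the same oversized flight aggregate.
admin_version, admin_view = store.load("flight-100")
customer_version, customer_view = store.load("flight-100")

# The admin changes the schedule and commits first.
admin_view["schedule"] = "departs 09:30"
store.save("flight-100", admin_version, admin_view)

# The customer's unrelated booking now fails: it shares the aggregate's version.
customer_view["bookings"].append("booking-1")
try:
    store.save("flight-100", customer_version, customer_view)
    booking_failed = False
except ConcurrentModification:
    booking_failed = True
```

The booking is rejected even though nothing about it conflicts with the schedule change. The only thing the two writes have in common is the boundary they were forced into.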

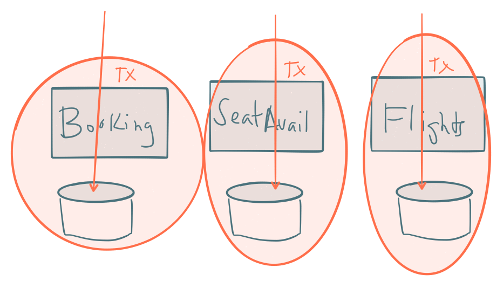

What if we made the transactional boundaries a little smaller?

Maybe booking, seat availability, and flights are their own independent aggregates. A booking encapsulates customer information, preferences, and maybe payment information. The seat availability aggregate encapsulates planes and plane configurations. The flights aggregate is made up of schedules, routes, etc., but we can proceed with creating bookings without impacting transactions on flight schedules and planes/seat availability. From a domain perspective, we want to be able to do that. We don’t need 100% strict consistency across planes/flights/bookings, but we do want to correctly record flight schedule changes from an admin, plane configurations from a vendor, and bookings from customers. So how do we implement things such as “pick a particular seat” on a flight?

During the booking process, we may call into the seat availability aggregate and ask it to reserve a seat on a plane. This seat reservation would be implemented as a single transaction (for example, hold seat 23A) that returns a reservation ID. We can associate this reservation ID with the booking and submit the booking knowing the seat was at one point “reserved.” Each of these steps (reserving a seat and accepting a booking) is an individual transaction and can proceed independently without any kind of two-phase commit or two-phase locking.
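The two independent transactions described above could look roughly like the following sketch. `SeatAvailability`, `Booking`, `hold_seat`, and the use of UUIDs as reservation IDs are assumptions made for illustration; each method call stands in for one small, local ACID transaction.

```python
import uuid
from dataclasses import dataclass, field


class SeatUnavailable(Exception):
    pass


class SeatAvailability:
    """Aggregate for one flight's seats; each call is one small transaction."""

    def __init__(self, seats):
        self._free = set(seats)
        self._holds = {}  # reservation_id -> seat

    def hold_seat(self, seat: str) -> str:
        if seat not in self._free:
            raise SeatUnavailable(seat)
        self._free.remove(seat)
        reservation_id = str(uuid.uuid4())
        self._holds[reservation_id] = seat
        return reservation_id

    def seat_for(self, reservation_id: str) -> str:
        return self._holds[reservation_id]


@dataclass
class Booking:
    """Separate aggregate: it only remembers the reservation ID."""
    customer: str
    seat_reservation_id: str
    booking_id: str = field(default_factory=lambda: str(uuid.uuid4()))


seats = SeatAvailability(["23A", "23B"])
reservation_id = seats.hold_seat("23A")             # transaction 1
booking = Booking("customer-42", reservation_id)    # transaction 2
```

The booking never locks or modifies the seat aggregate. It carries a reference to a reservation that was valid at some point, which is all the business actually requires here.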

Note that using a reservation here is a business requirement. We don’t do seat assignment here; we just reserve the seat. This requirement would need to be ferreted out, potentially through iterations of the model, because the language for the use case at first may simply say “allow a customer to pick a seat.” A developer could try to infer that the requirement means “pick from the remaining seats, assign this to the customer, remove it from inventory, and don’t sell more tickets than seats.” These would be extra, unnecessary invariants that add burden to our transactional model and that the business doesn’t really hold as invariants. The business is certainly okay taking bookings without complete seat assignments and even overselling the flight.

This is an example of allowing the true domain to guide you toward smaller, simplified, yet fully atomic transactional boundaries for the individual aggregates involved. The story cannot end here, though, because we now have to reconcile the fact that all these individual transactions need to come together at some point. Different parts of the data are involved (i.e., I created a booking and seat reservations, but these are not settled transactions with regard to getting a boarding pass/ticket, etc.).

How should microservices communicate across boundaries?

We want to keep the true business invariants intact. With DDD, we may choose to model these invariants as aggregates and enforce them using single transactions for an aggregate. There may be cases in which we’re updating multiple aggregates in a single transaction (across a single database or multiple databases), but those scenarios would be the exceptions. We still need to maintain some form of consistency between aggregates (and eventually between bounded contexts), so how should we do this?

One thing we should understand: Distributed systems are finicky. There are few guarantees (if any) we can make about anything in a distributed system in bounded time (things will fail, be non-deterministically slow or appear to have failed, systems have non-synchronized time boundaries, etc.), so why try to fight it? What if we embrace this and bake it into our consistency models across our domain? What if we say that between our necessary transactional boundaries, we can live with other parts of our data and domain being reconciled and made consistent at a later point in time?

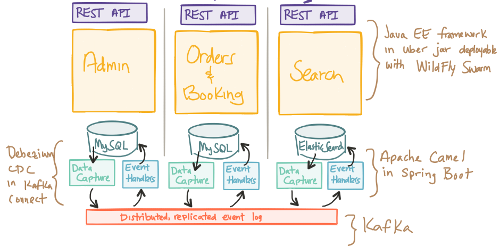

For microservices, we value autonomy. We value being able to make changes independent of other systems (in terms of availability, protocol, format, and so on). This decoupling of time and any guarantees about anything between services in any bounded time allows us to achieve this sort of autonomy (this is not unique to computer systems, or any systems for that matter). So I say, between transaction boundaries and between bounded contexts, use events to communicate consistency. Events are immutable structures that capture an interesting point in time that should be broadcast to peers. Peers will listen to the events in which they’re interested and make decisions based on that data, store that data, store some derivative of that data, update their own data based on a decision made with that data, and so on.

Continuing the flight booking example I started: When a booking is stored via an ACID-style transaction, how do we end up ticketing that? That’s where the aforementioned ticketing bounded context comes in. The booking bounded context would publish an event like NewBookingCreated, and the ticketing bounded context would consume that event and proceed to interact with the back-end (potentially legacy) ticketing systems. This requires some kind of integration and data transformation, something Apache Camel would be great at.
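A minimal sketch of that hand-off follows. Only the event name NewBookingCreated comes from the text; its fields, the `EventBus` class, and `TicketingContext` are hypothetical. The bus here is an in-memory, synchronous stand-in for a real broker, which would deliver asynchronously and could redeliver.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: events are immutable facts about the past
class NewBookingCreated:
    booking_id: str
    flight: str
    seat_reservation_id: str


class EventBus:
    """In-memory stand-in for a message broker (assumption for this sketch)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event):
        for handler in self._subscribers[type(event)]:
            handler(event)


class TicketingContext:
    def __init__(self):
        self.pending_tickets = []

    def on_new_booking(self, event: NewBookingCreated):
        # A real implementation would call the back-end ticketing system here.
        self.pending_tickets.append(event.booking_id)


bus = EventBus()
ticketing = TicketingContext()
bus.subscribe(NewBookingCreated, ticketing.on_new_booking)

event = NewBookingCreated("booking-1", "flight-100", "reservation-7")
bus.publish(event)  # the booking context knows nothing about ticketing
```

The booking side depends only on the event type, not on who consumes it, so new consumers can be added without changing the publisher.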

How do we do a write to our database and publish to a queue/messaging appliance atomically? What if we have ordering requirements/causal requirements between our events? And what about one database per service?

Ideally, our aggregates would use commands and domain events directly (as first-class citizens; that is, any operation is implemented as a command, and any response is implemented as a reaction to events), and we could more cleanly map between the events we use internally to our bounded context and those we use between contexts. We could just publish events (e.g., NewBookingCreated) to a messaging queue and then have a listener consume them from the queue and insert them idempotently into the database without having to use XA/2PC transactions, instead of inserting into the database ourselves. We could insert the event into a dedicated event store that acts like both a database and a messaging publish-subscribe topic (which is probably the preferred route). Or we can continue to use an ACID database and stream changes to that database to a persistent, replicated log, such as Apache Kafka, using something like Debezium, and deduce the events using some kind of event processor/stream processor. Either way, we want to communicate between boundaries with immutable point-in-time events.
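The “listener inserts it idempotently” option can be sketched as below, assuming each event carries a unique `event_id` (an assumption; the text does not specify the event shape). The table layout and the handler name are hypothetical, and SQLite stands in for whatever database the service owns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE bookings (event_id TEXT PRIMARY KEY, booking_id TEXT NOT NULL)"
)


def handle_new_booking_created(conn, event):
    """Apply the event at most once; redelivery of the same event_id is a no-op."""
    with conn:  # one local transaction: commits on success, rolls back on error
        cursor = conn.execute(
            "INSERT OR IGNORE INTO bookings (event_id, booking_id) VALUES (?, ?)",
            (event["event_id"], event["booking_id"]),
        )
    return cursor.rowcount == 1  # True only the first time this event is seen


event = {"event_id": "evt-1", "booking_id": "booking-1"}
first = handle_new_booking_created(conn, event)
second = handle_new_booking_created(conn, event)  # simulated redelivery
row_count = conn.execute("SELECT COUNT(*) FROM bookings").fetchone()[0]
```

Because the primary key makes the write idempotent, the queue only needs at-least-once delivery, and no XA/2PC coordination between the broker and the database is required. That is what lets events carry changes across boundaries safely.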

This comes with great advantages:

- We avoid expensive, potentially impossible transaction models across boundaries.

- We can make changes to our system without impeding the progress of other parts of the system (timing and availability).

- We can decide how quickly or slowly we want to see the rest of the outside world and become eventually consistent.

- We can store the data in our own databases however we’d like using the technology appropriate for our service.

- We can make changes to our schema/databases at our leisure.

- We become much more scalable, fault tolerant, and flexible.

- You have to pay even more attention to the CAP theorem and the technologies you choose to implement your storage/queues.

Notably, this comes with disadvantages:

- It’s more complicated.

- Debugging is difficult.

- Because we have a delay when seeing events, we cannot make any assumptions about what other systems know (which we cannot do anyway, but it’s more pronounced in this model).

- Operationalizing is more difficult.

- You must pay even more attention to the CAP theorem and the technologies you choose to implement your storage/queues.

I listed “paying attention to CAP, et al.” in both columns because, although it places a bit more of a burden on us, doing so is imperative anyway. Also, paying attention to the different forms of data consistency and concurrency in our distributed data systems is essential. Relying on “our database is ACID” is no longer acceptable (especially when that ACID database most likely defaults to weak consistency anyway).

Another interesting concept that emerges from this approach is the ability to implement a pattern known as Command Query Responsibility Segregation (CQRS), in which we separate our read models and our write models into separate services. Remember, we noted that the internet companies don’t have complex domain models. This is evident in their write models being simple (insert a tweet into a distributed log, for example). Their read models, however, are crazy complicated because of their scale. CQRS helps separate these concerns. On the flip side, in an enterprise, the write models might be incredibly complicated, whereas the read models may be simple flat select queries and flat DTOs. CQRS is a powerful separation-of-concerns pattern to evaluate once you’ve got proper boundaries and a good way to propagate data changes between aggregates and between bounded contexts.
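Here is a small sketch of that separation, assuming the write side records events to a log and the read side builds its own flat view from them. The function `handle_create_booking`, the class `BookingsByFlightView`, and the event fields are hypothetical; the plain list stands in for a real event stream.

```python
from collections import defaultdict

event_log = []  # stand-in for the stream between the write side and the read side


# Write side: validates commands and records events.
def handle_create_booking(booking_id, flight, customer):
    if not customer:
        raise ValueError("a booking needs a customer")
    event_log.append({"type": "NewBookingCreated", "booking_id": booking_id,
                      "flight": flight, "customer": customer})


# Read side: a flat, query-friendly view built from the events.
class BookingsByFlightView:
    def __init__(self):
        self._by_flight = defaultdict(list)
        self._position = 0  # how far into the log this view has read

    def catch_up(self, log):
        for event in log[self._position:]:
            if event["type"] == "NewBookingCreated":
                self._by_flight[event["flight"]].append(event["booking_id"])
        self._position = len(log)

    def bookings_for(self, flight):
        return list(self._by_flight.get(flight, []))


view = BookingsByFlightView()
handle_create_booking("booking-1", "flight-100", "customer-42")
before = view.bookings_for("flight-100")  # the view has not caught up yet
view.catch_up(event_log)
after = view.bookings_for("flight-100")
```

The gap between `before` and `after` is the eventual consistency discussed earlier: the read model lags the write model until it processes the new events.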

What about a service that has only one database and doesn’t share with any other service? In this scenario, we may have listeners that subscribe to the stream of events and may insert data into a shared database that the primary aggregates might end up using. This “shared database” is perfectly fine. Remember there are no rules, just tradeoffs. In this instance, we may have multiple services working in concert together with the same database, and so long as we (our team) own all the processes, we don’t negate any of our advantages of autonomy. Thus, when you hear someone say, “A microservice should have its own database and not share it with anyone else,” you can respond, “Well, kinda.”

What if we just turn the database inside out?

What if we take the concepts in the previous section to their logical extreme? What if we just say we’ll use events/streams for everything and also persist these events forever? What if we say databases/caches/indexes are really just materialized views of a persistent log/stream of events that happened in the past, and the current state is a left fold over all of those events?

This approach brings even more benefits that you can add to the benefits of communicating via events (listed above):

- Now you can treat your database as a “current state” of record, not the true record.

- You can introduce new applications and re-read the past events and examine their behaviors in terms of what would have happened.

- You get perfect audit logging for free.

- You can introduce new versions of your application and perform exhaustive testing on it by replaying the events.

- You can more easily reason about database versioning/upgrades/schema changes by replaying the events into the new database.

- You can migrate to completely new database technology (e.g., maybe you find you’ve outgrown your relational DB and you want to switch to a specialized database/index).
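The idea that current state is a left fold over the event log can be shown in a few lines. The event types `SeatHeld` and `SeatReleased` are hypothetical names for this sketch, and a Python list stands in for the persistent log.

```python
from functools import reduce

events = [
    {"type": "SeatHeld", "seat": "23A"},
    {"type": "SeatHeld", "seat": "23B"},
    {"type": "SeatReleased", "seat": "23A"},
]


def apply(state, event):
    """Pure transition function: (state, event) -> new state."""
    if event["type"] == "SeatHeld":
        return state | {event["seat"]}
    if event["type"] == "SeatReleased":
        return state - {event["seat"]}
    return state  # unknown event types are ignored


def current_state(log):
    # Left fold: start from the empty state and apply events in order.
    return reduce(apply, log, frozenset())


held_now = current_state(events)
held_after_two_events = current_state(events[:2])  # replay to an earlier point
```

Replaying a prefix of the log reconstructs what the state was at that point, and replaying the full log into a different `apply` function is how a new view or a new database technology would be populated.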

For more information on this, read “Turning the database inside-out with Apache Samza”, by Martin Kleppmann.

When you book a flight on aa.com, delta.com, or united.com, you’re seeing some of these concepts in action. When you choose a seat, you don’t actually get assigned it—you reserve it. When you book your flight, you don’t actually have a ticket—you get an email later telling you you’ve been confirmed/ticketed. Have you ever had a reservation change and been assigned a different seat for the flight? Or been to the gate and heard them ask for volunteers to give up seats because the flight is oversold? These are examples of transactional boundaries, eventual consistency, compensating transactions, and even apologies at work.

Conclusion

Data, data integration, data boundaries, enterprise usage patterns, distributed systems theory, timing, and so on are all the hard parts of microservices (because microservices is really just distributed systems). I’m seeing too much confusion around technology (“If I use Spring Boot I’m doing microservices; I need to solve service discovery, load balancing in the cloud before I can do microservices,” “I must have a single database per microservice”) and useless rules regarding microservices.

Don’t worry. Once the big vendors have sold you all the fancy suites of products, you’ll still be left to do the hard parts I’ve outlined in this article.
